Persuasive driving assistant

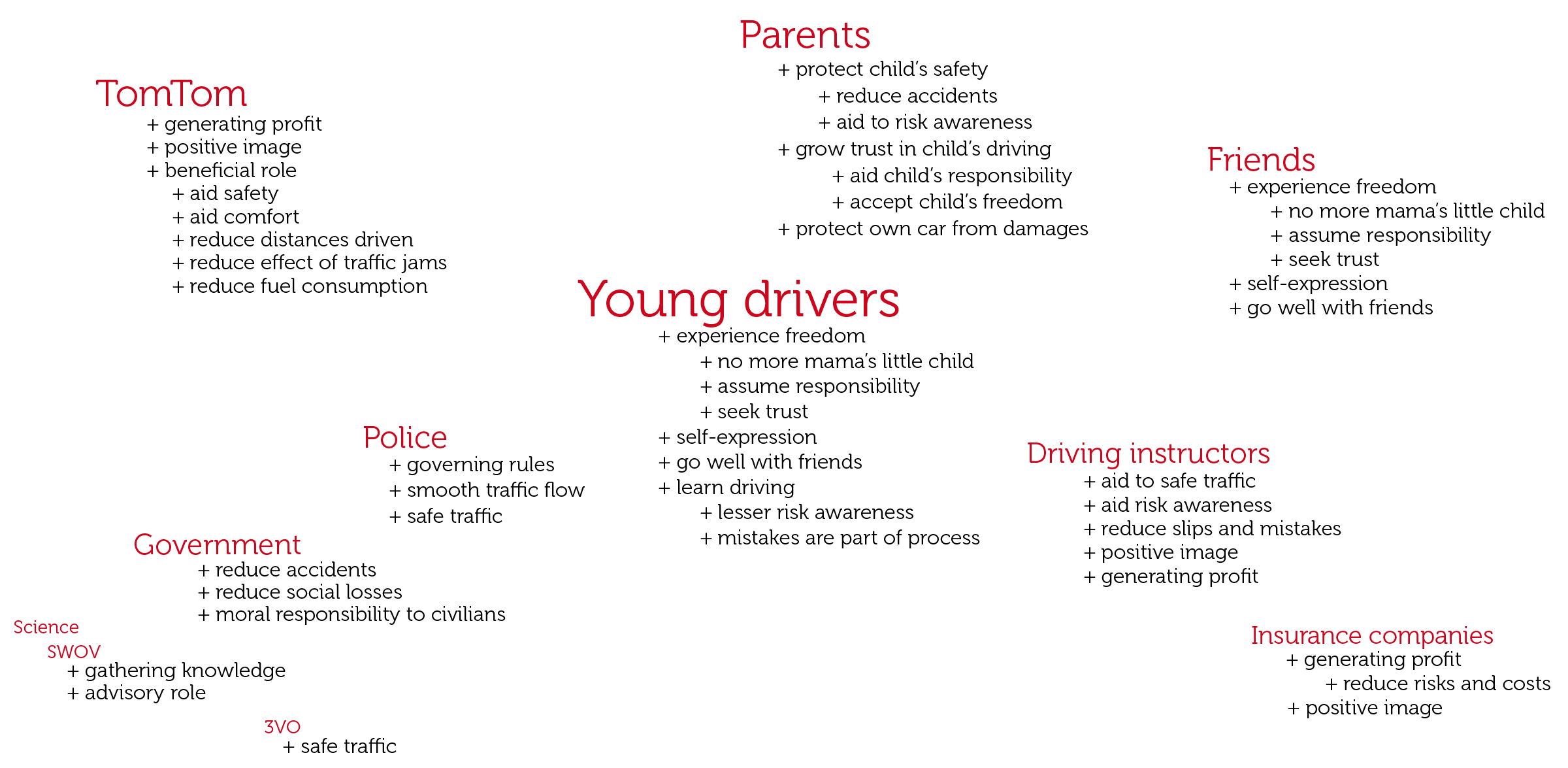

I designed a persuasive robot that aims to influence the driving behaviour of young drivers. Safety is a key concern for novice drivers, who face higher risks than other drivers for a variety of reasons, inexperience among them. Persuading them to make safer choices behind the wheel could significantly reduce accidents.

Early process: focus on risk awareness

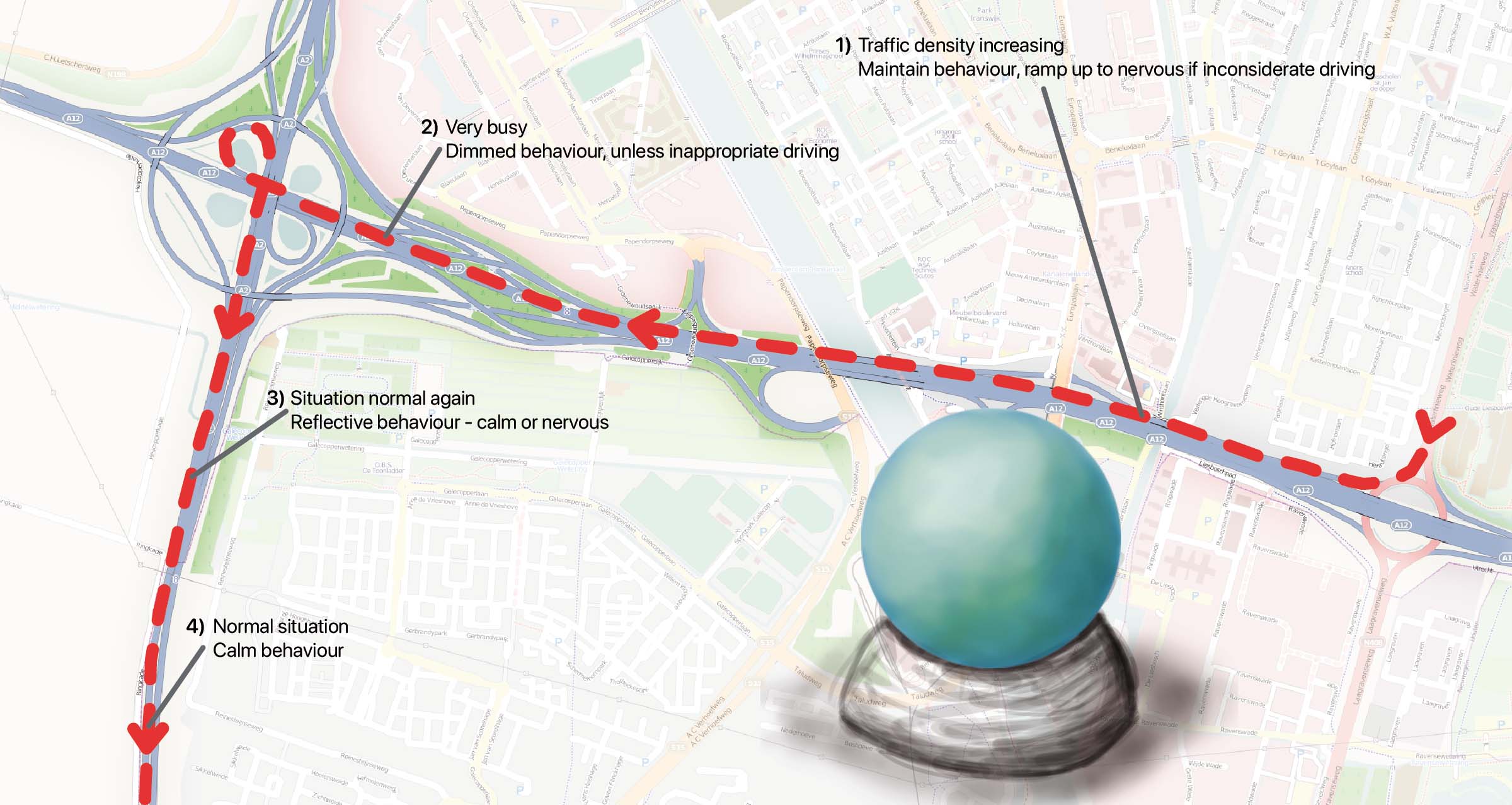

The product should promote safer driving through a better understanding of one’s own behaviour. My goal was to advise the driver on the risks taken (or about to be taken) in relation to the ‘real risk’. This real risk would be derived from knowledge of the immediate environment, such as the route taken, nearby crossings, speed limits, possibly accident data, and the vehicle’s current movement data. The advice is intended to raise awareness of, and prompt reflection on, one’s behaviour, ultimately leading to safer driving.

Apart from achieving persuasion, a major challenge is reaching that goal without alienating users who fear losing their freedom and independence. For young drivers, independence is a major motivation for driving.

Designing the driving assistant

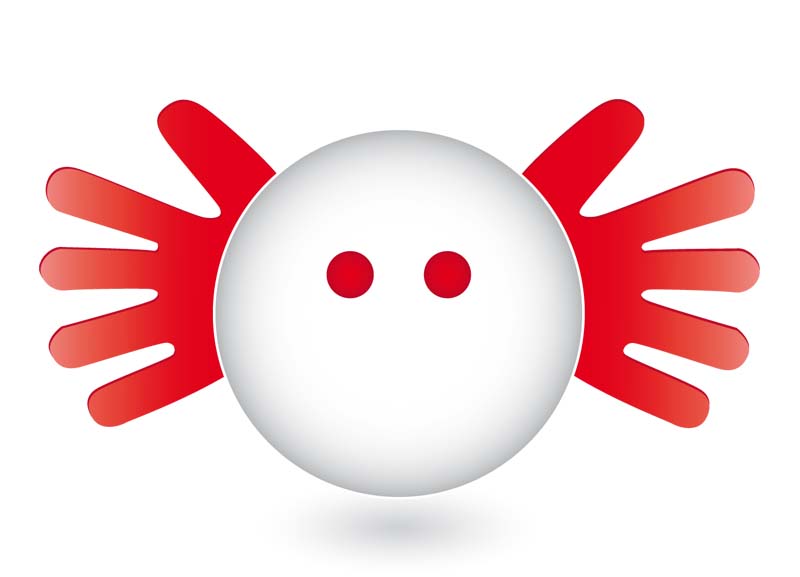

Because the product had to be persuasive, and persuasion works best with social incentives, I decided to explore the animated robot direction further. I wanted to give novice drivers the impression that someone was riding along with them, ready to help if needed.

I went ahead with a dashboard-mounted persuasive robot linked to a TomTom navigation device. The robot warns of potentially dangerous situations ahead and gives the young driver feedback on their performance. Prior research suggests that such ‘social’ feedback can persuade people to behave more safely.

User feedback for the robot’s gestures

The robot conveys its messages through a range of gestures. To keep the design simple and avoid distractions, three behaviours were developed further: positive, to encourage; negative, to discourage unsafe driving; and alerting, to warn of upcoming situations. The gestures themselves were inspired by asking a number of young people to pose a wireframe figure so that it portrayed certain emotions. Similar quick evaluations informed the visual design.
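To make the three behaviours more concrete, here is a minimal sketch of how a controller might pick one of them. It is not the prototype’s actual logic: the `choose_gesture` function, the 5 km/h speed tolerance and the 150 m warning distance are all hypothetical, and a known hazard ahead (such as a crossing on the route) stands in for the ‘real risk’ described earlier.

```python
from enum import Enum
from typing import Optional


class Gesture(Enum):
    """The three behaviours the robot can express."""
    POSITIVE = "positive"  # encourage safe driving
    NEGATIVE = "negative"  # discourage unsafe driving
    ALERTING = "alerting"  # warn of an upcoming situation


def choose_gesture(
    speed_kmh: float,
    limit_kmh: float,
    hazard_distance_m: Optional[float] = None,
    warn_distance_m: float = 150.0,  # hypothetical warning range
    tolerance_kmh: float = 5.0,      # hypothetical speed tolerance
) -> Optional[Gesture]:
    """Pick a behaviour from hypothetical inputs; None means stay still."""
    # A known hazard close ahead (e.g. a crossing on the route) takes priority.
    if hazard_distance_m is not None and hazard_distance_m <= warn_distance_m:
        return Gesture.ALERTING
    # Clearly over the limit: discourage.
    if speed_kmh > limit_kmh + tolerance_kmh:
        return Gesture.NEGATIVE
    # At or under the limit: encourage.
    if speed_kmh <= limit_kmh:
        return Gesture.POSITIVE
    # Slightly over, but within tolerance: no gesture, to limit distraction.
    return None


print(choose_gesture(48, 50))         # Gesture.POSITIVE
print(choose_gesture(62, 50))         # Gesture.NEGATIVE
print(choose_gesture(48, 50, 100.0))  # Gesture.ALERTING
```

Giving the alert priority and staying still in the tolerance band reflect the aim of keeping the design simple and avoiding distractions; a real system would also need to limit how often gestures fire.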

Form explorations

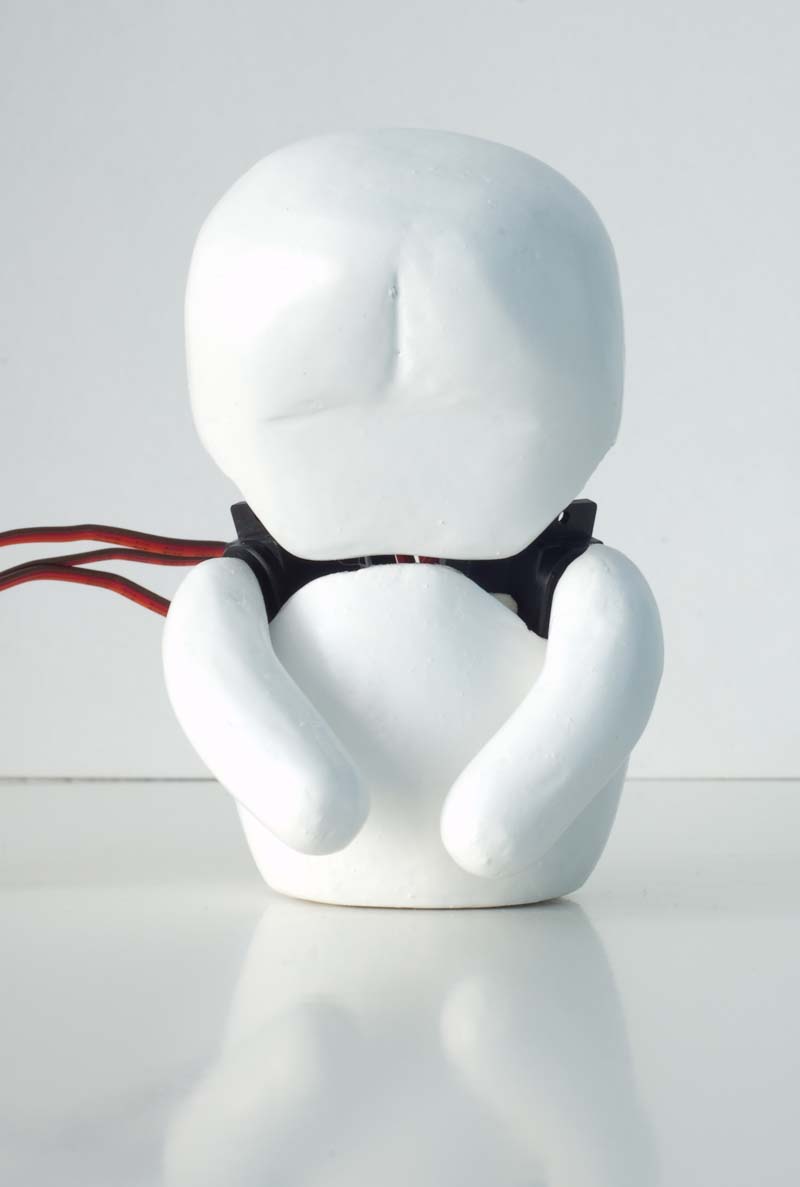

Final design

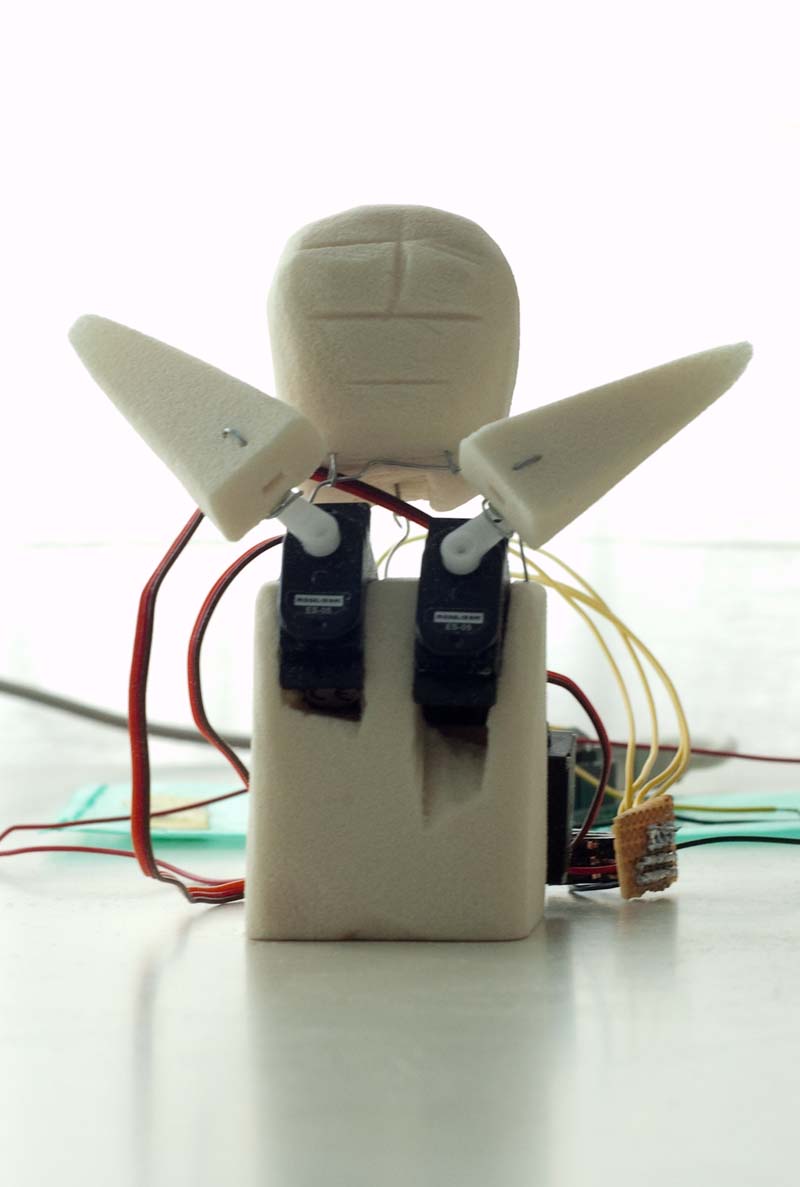

I built a working prototype of the robot that could move its head and arms. The servo motors under its shell were controlled by an Arduino, which I hid in a mock-up TomTom navigation device (visible in the background of the top image).

The video below shows the robot in action, in glorious 480p resolution. It was all we had, kids ;)

Thesis report

The final report, documenting the process and results in greater detail, is available online.

Reflection twelve years on

Looking back on this in 2022, I can see room for improvement in most aspects, but these points stand out:

- The project lacked an ongoing user evaluation process, so I’m not sure whether young drivers would actually like this kind of intervention.

- The alerting movement in particular grabs attention precisely when attention should be directed at the road outside, so it may be counterproductive.

- Other ways of conveying info should be considered.

- Today, speech technology or even augmented reality visuals layered on top of the outside world would be viable options.

- I wanted something recognisable as a ‘person,’ so I went with a robot separate from the TomTom device.

- This gave me more freedom as a design project, but it’s not necessarily a better design.

- Integration with the TomTom device should have been considered in more detail.

- The robot looks quite bland and has undesirable gaps. I remember running short on time, but if the servo motors had been placed lower in the robot’s abdomen, the gaps could have been avoided.

- A head alone, with animated eyes, could have worked just as well as the whole robot body.

- If the eyes were a display, they could show situation-aware information, making the robot far more useful.

- I should have made a mock-up of the robot in different colours, because white is really bland.